Update 4/20/2021: LiDAR has become significantly more power- and cost-efficient (as we predicted), but our core argument stays the same: to unlock autonomous driving, the car must be able to safely handle the entire spectrum of cases it might encounter. That requires copious amounts of real-world camera data, which is what lets the car process and respond to real-time information quickly and reliably. To Tesla’s benefit, competitors have focused on LiDAR at the expense of gathering data, giving Tesla a multi-year lead in building “the brain.”

Update 6/14/2021: Starting in May 2021, Tesla removed radar from new Model 3 and Model Y cars. In our article "Why radar is doomed," we discuss the strengths and weaknesses of radar, as well as how a Tesla can still drive safely without it.

---

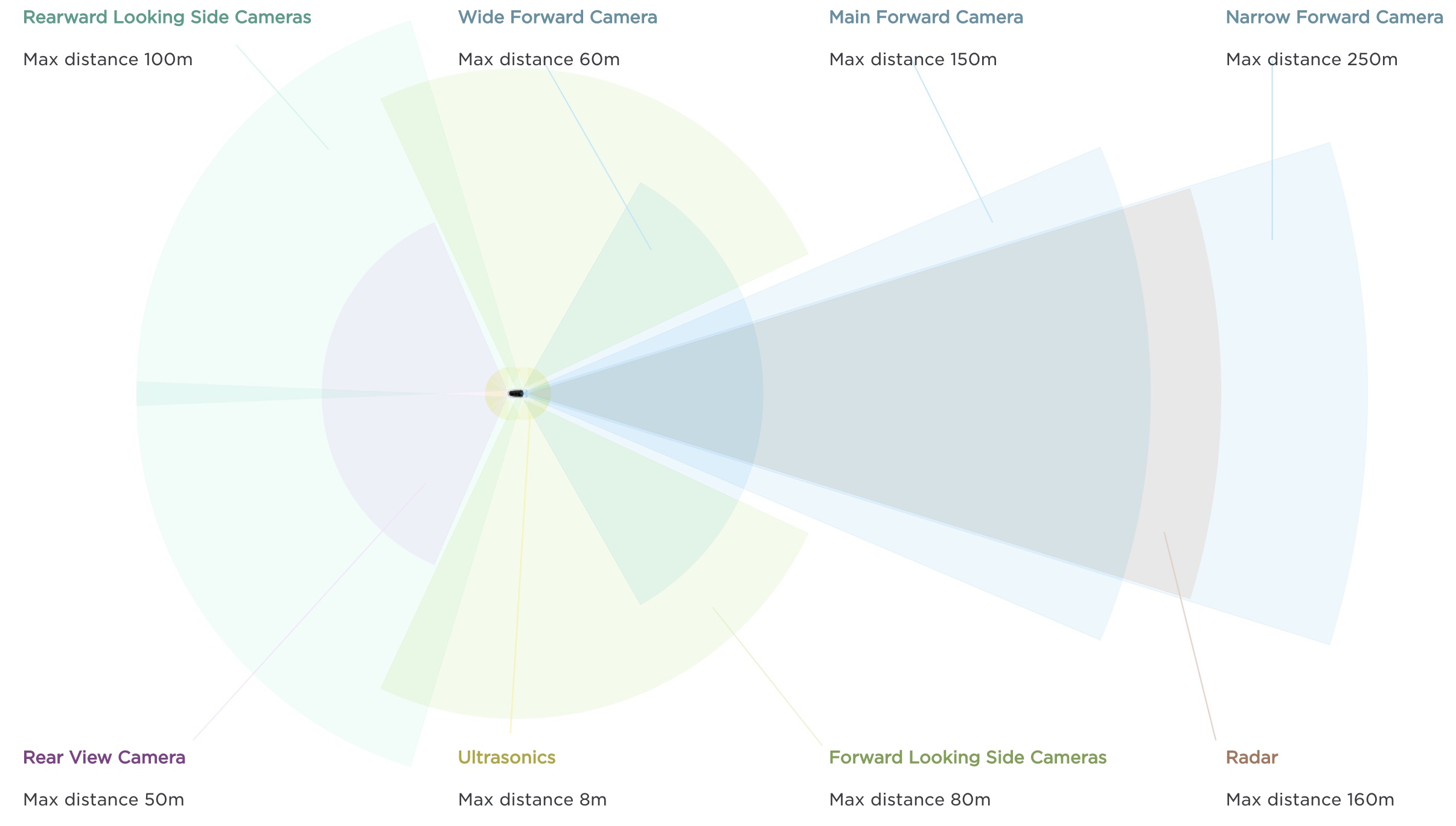

In typical Musk fashion, Tesla is an outlier: it is developing autonomous cars that do not use LiDAR (Light Detection and Ranging) as a sensor, while many others insist LiDAR is absolutely necessary for autonomous driving. Instead of LiDAR, Tesla uses cameras covering 360° around the car, radar (Radio Detection and Ranging) to complement the cameras' object detection in adverse weather, and ultrasonic sensors to detect objects very close to the car (up to 8 m, or 26 feet, away).

Musk has become infamous for his bold statements against LiDAR, none stronger than this one from Tesla's Autonomy Day in 2019:

"LiDAR is a fool’s errand. Anyone relying on LiDAR is doomed. Doomed! [They are] expensive sensors that are unnecessary. It’s like having a whole bunch of expensive appendices. Like, one appendix is bad, well now you have a whole bunch of them, it’s ridiculous, you’ll see.” (Tesla Autonomy Day YouTube video, 1:41:23-1:41:50)

Autopilot Review has compiled clips of every statement against LiDAR made at Tesla’s Autonomy Day by Musk and by Andrej Karpathy, Tesla's Director of AI.

NOTE: Technically, Tesla is not the only company developing autonomous cars without LiDAR. George Hotz of Comma.AI also believes that LiDAR is unnecessary and cannot solve the problem of perception/vision. Torquenews summarizes Hotz’s criticism of LiDAR: “According to [Hotz], these companies are doomed to fail since they use LiDAR to localize their driving to centimeters. This means rather than their AIs looking at a scene and deciding where to go, the companies pre-map their routes and use LiDAR to localize their location within centimeters so that the vehicles can follow it” (emphases added).

Basic Arguments Against LiDAR:

Expensive, Useless in Bad Weather, Power Hungry, and Ugly

1. LiDAR is expensive, in both initial and maintenance costs. Even though Google’s Waymo reduced the cost of its LiDAR by 90%, from $75,000 (in 2009) to $7,500 (in 2017), the need for three LiDAR systems per car means that LiDAR-equipped vehicles will be unable to form a low-cost robotaxi fleet or gain widespread market penetration any time soon. In fact, Waymo’s fifth-generation car, introduced in March 2020, increased the number of LiDAR sensors from three to four, further increasing costs. Waymo does not disclose the cost of its cars, but a 2017 estimate suggests an autonomous car equipped with LiDAR might cost $250,000, while a more recent article puts the cost at about $180,000.

In contrast, Tesla’s $38,000 Model 3, with a $10,000 Full Self-Driving (FSD) add-on, already has all the computer hardware and sensors necessary for autonomous driving. All we are waiting for is a version of Tesla’s neural network software that is 10x safer than a human driver.
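To make the cost comparison concrete, the short Python sketch below reruns the arithmetic using only the figures quoted above. It assumes, hypothetically, that every LiDAR unit costs the 2017 price of $7,500; Waymo does not publish its actual per-unit costs, so treat the output as illustrative rather than as a real bill of materials.

```python
# Figures quoted in the text. The per-unit price is an assumption, since
# Waymo does not disclose what each LiDAR unit actually costs today.
LIDAR_UNIT_COST_2009 = 75_000
LIDAR_UNIT_COST_2017 = 7_500
MODEL_3_PRICE = 38_000
FSD_ADD_ON = 10_000


def percent_reduction(old: float, new: float) -> float:
    """Percentage drop from an old price to a new one."""
    return (old - new) / old * 100


def lidar_hardware_cost(units: int, unit_cost: float = LIDAR_UNIT_COST_2017) -> float:
    """LiDAR hardware cost for one vehicle carrying `units` sensors."""
    return units * unit_cost


if __name__ == "__main__":
    drop = percent_reduction(LIDAR_UNIT_COST_2009, LIDAR_UNIT_COST_2017)
    print(f"2009 -> 2017 price drop: {drop:.0f}%")
    for units in (3, 4):  # Waymo's earlier cars vs. its fifth-generation car
        print(f"{units} LiDAR units: ${lidar_hardware_cost(units):,.0f}")
    print(f"Model 3 + FSD: ${MODEL_3_PRICE + FSD_ADD_ON:,}")
```

Under that assumption, four LiDAR units alone come to $30,000, compared with $48,000 for an entire Model 3 with the FSD add-on.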

We must also account for the cost of maintenance. Because LiDAR units typically rely on moving parts, they are more likely to break or malfunction and, thus, more expensive to maintain. Radar, by contrast, has no moving parts and is cheap to replace.

Wright’s Law tells us that a technology's cost falls by a roughly constant percentage each time its cumulative production doubles, so LiDAR will keep getting cheaper. But we believe that long before LiDAR becomes cheap enough to compete with Tesla’s low-cost robotaxi fleet, Tesla will already have won the race.
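Wright's Law is usually written as C(n) = C1 * n^(-b), where C1 is the cost of the first unit, n is the cumulative number of units produced, and b is chosen so that each doubling of n multiplies the cost by (1 - learning rate). The sketch below implements that formula. The 20% learning rate is a hypothetical placeholder, not a measured figure for LiDAR; the $75,000 starting cost is the 2009 figure quoted above.

```python
import math


def wrights_law_cost(first_unit_cost: float, cumulative_units: float,
                     learning_rate: float) -> float:
    """Unit cost after `cumulative_units` have been produced, per Wright's Law.

    `learning_rate` is the fractional cost drop per doubling of cumulative
    production (0.20 means each doubling cuts the cost by 20%).
    """
    b = -math.log2(1 - learning_rate)  # progress exponent
    return first_unit_cost * cumulative_units ** (-b)


def doublings_needed(target_cost_ratio: float, learning_rate: float) -> float:
    """Doublings of cumulative production needed to reach target_cost_ratio of today's cost."""
    return math.log(target_cost_ratio) / math.log(1 - learning_rate)


if __name__ == "__main__":
    # Hypothetical 20% learning rate, starting from the $75,000 figure.
    for units in (1, 2, 4, 1024):
        print(units, round(wrights_law_cost(75_000, units, 0.20)))
    # How many doublings would a further 90% price cut take at that rate?
    print(round(doublings_needed(0.10, 0.20), 1))
```

Note that the decline is tied to cumulative production, not calendar time: at a 20% learning rate, a further 90% price cut takes roughly ten more doublings of total units built.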

2. LiDAR has difficulties in bad weather. LiDAR measures distance with laser light (typically near-infrared), which is scattered by raindrops, snowflakes, and fog droplets, so LiDAR does not work well in heavy rain, snow, or fog, whereas radar still works in such conditions. LiDAR is essentially blind in bad weather. For this reason, autonomous cars using LiDAR still need radar to drive in bad weather, which further adds to their cost.

If a car only operates in good weather, like Waymo's autonomous driving program in certain parts of Phoenix, Arizona, why have LiDAR at all, when cameras can already infer distance and see quite well in good weather?

3. LiDAR is power hungry. Of all the sensors, LiDAR requires the most power, which meaningfully decreases the car’s driving range. More frequent recharging and higher charging costs could be devastating to a low-cost robotaxi fleet and a major turn-off for consumers, who don't want the extra cost and inconvenience.
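The effect of an always-on sensor load on range can be estimated with simple energy accounting: a constant load of P watts, at an average speed of v mph, costs P / v watt-hours per mile on top of the energy used to move the car. The sketch below uses hypothetical battery, efficiency, and power numbers purely for illustration; they are not measured figures for any specific LiDAR or vehicle.

```python
def estimated_range_miles(battery_kwh: float, drive_wh_per_mile: float,
                          sensor_watts: float, avg_speed_mph: float) -> float:
    """Driving range when a constant sensor/compute load is added.

    Driving one mile at avg_speed_mph takes 1 / avg_speed_mph hours, so a
    constant load of sensor_watts adds sensor_watts / avg_speed_mph Wh per mile.
    """
    if avg_speed_mph <= 0:
        raise ValueError("average speed must be positive")
    sensor_wh_per_mile = sensor_watts / avg_speed_mph
    return battery_kwh * 1000 / (drive_wh_per_mile + sensor_wh_per_mile)


if __name__ == "__main__":
    # Hypothetical car: 75 kWh battery, 250 Wh/mile to drive, 25 mph average.
    baseline = estimated_range_miles(75, 250, sensor_watts=0, avg_speed_mph=25)
    with_load = estimated_range_miles(75, 250, sensor_watts=500, avg_speed_mph=25)
    print(f"baseline: {baseline:.0f} mi, with hypothetical 500 W load: {with_load:.0f} mi")
```

Because the load is divided by speed, the same wattage costs more range in slow city driving, such as urban robotaxi service, than on the highway.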

4. LiDAR is ugly. While this does not matter from an engineering or safety perspective, it does matter from a consumer’s perspective when choosing a car. There is no way around how ugly LiDAR sensors look when they are placed atop a car.

Don't forget: Elon Musk is literally a rocket scientist . . .

Before we answer the question of whether LiDAR is even necessary, we must begin with an obvious but often overlooked point: Elon Musk and his engineers at Tesla are not ignorant about LiDAR from an engineering or scientific standpoint. Musk is, after all, CEO of SpaceX, which uses LiDAR to dock spacecraft. Everyone acknowledges that Musk is an excellent businessman and entrepreneur, but many forget that he is literally a rocket scientist with a background in physics. At UPenn, Musk earned degrees in both economics and physics, an unusual but powerful combination for tech entrepreneurship. Amid Musk’s infamous comments against LiDAR at Tesla’s Autonomy Day, many missed an additional statement he made:

“I should point out that I don’t actually super hate LiDAR … the SpaceX dragon uses LiDAR to navigate to the space station and dock. …SpaceX developed its own LiDAR from scratch to do that and I spearheaded that effort personally. Because in that scenario [a spaceship docking], LiDAR makes sense. But in cars, it’s freakin’ stupid. It’s expensive, unnecessary, and as Andrej [Karpathy] was saying, once you solve vision, [LiDAR] is worthless.” (Tesla Autonomy Day YouTube video, 2:34:08-2:34:39, lightly edited)

Musk has made a similar point on Twitter.

Unlike other leaders of companies developing autonomous driving, Musk is first and foremost a scientist and engineer who understands LiDAR and who personally spearheaded the development of a custom LiDAR system at SpaceX. Thus, when Musk rejects LiDAR as unnecessary, his arguments should be seen not just from a business perspective (LiDAR is expensive and ugly) but also from an engineering and scientific perspective.

Reframing the Debate from First Principles

Elon Musk operates from first principles: “It’s important to reason from first principles, rather than by analogy. The normal way we conduct our lives is that we reason by analogy. ‘We’re doing this because it’s like something else that was done.’ Or, ‘it’s like what other people are doing.’ . . . It’s mentally easier to reason by analogy rather than from first principles. But first principles is a physics way of looking at the world. And what that means is you boil down things to the most fundamental truths, ‘What are we sure is true, or sure is possible?’ And then reason up from there.” (lightly edited from an interview with Kevin Rose of Foundation.KR on September 7, 2012, https://www.youtube.com/watch?v=L-s_3b5fRd8, 23:12-23:28)

So we must begin with some fundamental questions:

1st: What exactly are we debating?

The car's "vision"

This entire LiDAR vs. cameras debate is fundamentally about the car's sensors -- how will the car "see" the world around it?

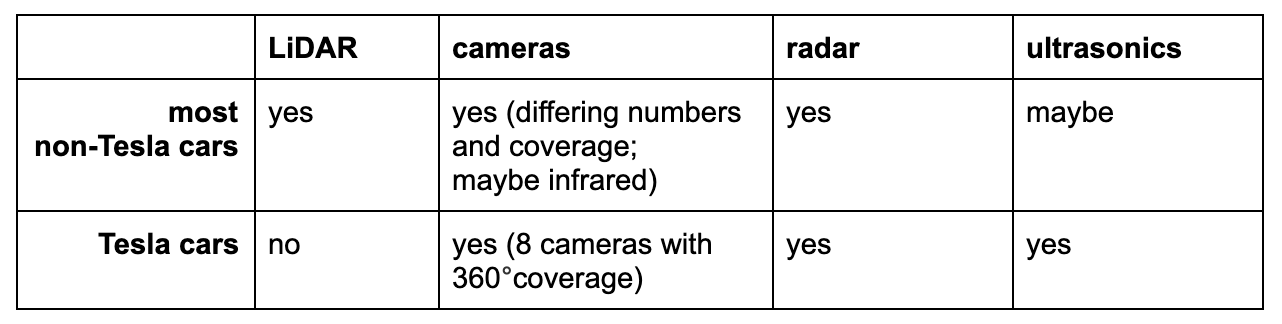

So despite how it is framed all over the Internet (as a simple Google search shows), this is not fundamentally a “LiDAR vs. cameras” debate. The comparison should be about the full package of sensors each company uses.

In other words, how does Tesla’s full package of sensors (cameras, radar, ultrasonics) compare to other companies and their full package of sensors (LiDAR, cameras, radar, maybe ultrasonics)? That is the debate.

2nd: For "vision," how does LiDAR compare to other sensors?

Each sensor has its own strengths and weaknesses

LiDAR (Light Detection and Ranging)

LiDAR's fundamental task is measuring distance. LiDAR technology is continually improving, and newer LiDARs are gaining camera-like vision in addition to their distance-measuring abilities, but LiDAR was fundamentally developed to measure distance. At present, LiDAR's main disadvantages (mentioned above) are: (1) its high cost, (2) its inability to measure distance through heavy rain, snow, and fog, (3) its high power consumption, and (4) its ugliness.

Radar (Radio Detection and Ranging)

Like LiDAR, radar’s fundamental task is measuring distance, but it uses radio waves instead of laser light. Radar's advantages are: (1) its low cost, (2) its ability to measure distance through heavy rain, snow, and fog, and (3) its ability to be hidden from view.
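Both LiDAR and radar measure distance by timing an echo: a pulse travels to the target and back, so the distance is half the wave speed times the round-trip time, d = v * t / 2. The Python sketch below shows that basic time-of-flight relation. It is a simplification: many automotive radars use frequency-modulated continuous-wave (FMCW) signals and recover the round-trip delay indirectly rather than timing discrete pulses. The same relation applies to ultrasonic sensors, using the speed of sound instead of the speed of light.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # LiDAR (laser light) and radar (radio waves)
SPEED_OF_SOUND_M_S = 343.0          # ultrasonic sensors, in air at about 20 °C


def echo_distance_m(round_trip_s: float, wave_speed_m_s: float) -> float:
    """One-way distance to a target from the round-trip time of its echo."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return wave_speed_m_s * round_trip_s / 2  # halve it: the pulse goes out and back


if __name__ == "__main__":
    # A light echo returning after 200 nanoseconds: target about 30 m away.
    print(f"{echo_distance_m(200e-9, SPEED_OF_LIGHT_M_S):.2f} m")
    # An ultrasonic echo returning after 40 milliseconds: target about 6.9 m away.
    print(f"{echo_distance_m(0.040, SPEED_OF_SOUND_M_S):.2f} m")
```

Note how short the light round trip is: about 200 nanoseconds for a target 30 m away, which is why LiDAR and radar need fast, precise timing electronics.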

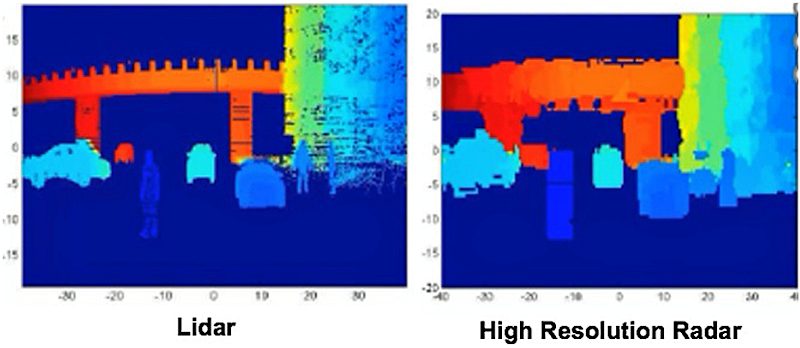

Solely because radar can measure distance in bad weather, even cars with LiDAR will still need radar for initial rollout. However, LiDAR generates far richer data than radar, as the comparison image shows (although newer radar systems keep getting better, at higher cost).

However, once machine learning is applied to camera data, even LiDAR's higher-resolution depth information becomes obsolete.

Cameras

Cameras are obviously the most fundamental source of “vision” for the car, but they also have limitations: (1) cameras cannot see through fog, snow, and heavy rain, and (2) cameras cannot measure distance directly the way LiDAR can, since that is not their purpose, although computers can be trained to estimate distance from camera images through AI and machine learning.
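As an illustration that camera images do carry distance information, here is the classical geometry for a rectified stereo camera pair: depth Z = f * B / d, where f is the focal length in pixels, B is the distance between the two cameras, and d is the disparity (how many pixels a point shifts between the two images). This is a textbook technique swapped in for illustration, not Tesla's approach, which Karpathy describes as learning depth with neural networks; the camera parameters below are hypothetical.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 1000 px focal length, cameras 0.3 m apart.
    for disparity in (60, 10, 9):
        print(disparity, round(stereo_depth_m(1000, 0.3, disparity), 2))
```

The last two cases show why depth from pure geometry gets less precise with distance: at about 30 m, a single pixel of disparity error moves the estimate by more than 3 m.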

Ultrasonics

LiDAR, cameras, and radar are all poor at detecting objects that are very close, so Tesla uses ultrasonic sensors to help with object detection at less than 8 meters (26 feet).


*Arbe Robotics is developing high-resolution 4D imaging radar that will improve radar’s perception when compared to older radar technologies. However, the cost and quality of these new radars are unknown at this point.

**Unless the cameras can be taught to measure distance via neural networks (as Tesla has done), in which case the cameras can be nearly as good as LiDAR at gauging distance.

Clearly, no single sensor is adequate by itself, which is why all companies use a combination of sensors.

Each sensor is (generally) the “best” in one area: LiDAR is best at measuring distance in general, radar is best at measuring distance in bad weather, cameras are best at perception/image recognition, and ultrasonics are best at measuring nearby objects (less than 8 meters/26 feet).
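One textbook way to combine several sensors' distance readings is inverse-variance weighting: each estimate is weighted by 1/σ², so a sensor that is noisy in the current conditions counts for less. For a single quantity with independent Gaussian errors, this is what a Kalman filter's update step computes. The sketch below is a generic illustration under those assumptions, with made-up numbers; it is not a description of Tesla's or any other company's fusion software.

```python
import math
from typing import Sequence, Tuple


def fuse_distances(estimates: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent distance estimates.

    Each estimate is (distance_m, std_dev_m). Returns (fused_distance_m,
    fused_std_dev_m); the fused uncertainty is no larger than the best input's.
    """
    if not estimates or any(sd <= 0 for _, sd in estimates):
        raise ValueError("need at least one estimate, each with a positive std dev")
    weights = [1.0 / sd ** 2 for _, sd in estimates]
    total = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return fused, math.sqrt(1.0 / total)


if __name__ == "__main__":
    # Hypothetical readings in fog: the camera estimate is noisy, radar stays sharp.
    camera = (31.0, 3.0)
    radar = (29.5, 0.5)
    distance, sd = fuse_distances([camera, radar])
    print(f"fused: {distance:.2f} m ± {sd:.2f} m")
```

The fused estimate lands close to the radar reading because radar is far more certain in this hypothetical fog scenario; in clear weather, with a sharper camera estimate, the weights would shift.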

3rd: Is LiDAR necessary for an autonomous car’s “vision”?

No -- if cameras can learn to measure distance

Nearly every company answers yes, except Tesla and the little-known Comma.AI. Most companies value LiDAR because it is the best at measuring distance and detecting obstacles, so they consider it necessary for safety.

However, if a driving computer equipped with cameras, radar, and ultrasonic sensors can be trained to measure distance just as well as LiDAR, then LiDAR is a redundant and unnecessary sensor (as well as being expensive, useless in bad weather, power hungry, and ugly).

This is the death knell for LiDAR. If distance and perception can be achieved with enough data, there is nothing LiDAR can do that cameras cannot. Tesla has achieved this with its Full Self-Driving (FSD) computer and through deep learning and AI. This achievement has been explained in multiple places:

- Andrej Karpathy, Director of AI at Tesla, explains how depth is measured without LiDAR in his Tesla Autonomy Day presentation (2:16:46-2:25:08)

- Multiple engineers, including one who sold his company to Tesla, authored the academic paper “Replicating Lidar Point Clouds with Deep Sensor Cloning”

- Derrick Mwiti has written “Research Guide for Depth Estimation with Deep Learning”
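Once a network has predicted a depth for every pixel, turning that depth map into a LiDAR-style 3D point cloud is plain geometry. With the pinhole camera model, pixel (u, v) at depth Z maps to X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy, where fx and fy are focal lengths in pixels and (cx, cy) is the image center. This back-projection is the core step of what the research literature calls "pseudo-LiDAR"; the sketch below is a generic version of it, not necessarily the method used in the works listed above, and the depth values and intrinsics in the example are hypothetical.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_map_to_points(depth: List[List[float]], fx: float, fy: float,
                        cx: float, cy: float) -> List[Point3D]:
    """Back-project a per-pixel depth map (meters) into camera-frame 3D points.

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with no valid depth (Z <= 0) are skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):      # v: pixel row index
        for u, z in enumerate(row):      # u: pixel column index, z: depth
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


if __name__ == "__main__":
    # Hypothetical 2x3 depth map (e.g. a network's output) and camera intrinsics.
    depth = [[10.0, 10.0, 0.0],
             [12.0, 12.0, 12.0]]
    for point in depth_map_to_points(depth, fx=2.0, fy=2.0, cx=1.0, cy=0.5):
        print(point)
```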

4th: What is necessary for autonomous driving?

"Vision" AND "Brains"

Vision alone (through sensors) is not enough for autonomous driving. A car could have the best and most expensive sensors in the world, but it cannot drive itself unless it has a well-trained “brain” to interpret what it is “seeing” (perception/image recognition) and to decide “how to respond” (artificial intelligence).

In other words, sensors feed data to the driving computer for interpretation. After having fed data to the computer, sensors have served their purpose. The quality of the car’s autonomous driving now depends on the quality of the computer’s perception and artificial intelligence. Sensors are the first step, but not the final step in autonomous driving.

We can compare this to two drivers: (1) a teenager with 20/20 vision and no driving experience, vs. (2) a 60-year-old with 45 years of driving experience who needs new glasses and is driving to the eye doctor. Will the teenager drive better? Will the teenager’s vision advantage lead to better driving than the 60-year-old's experience? Of course, at some point the 60-year-old’s vision could become so bad that he cannot drive safely. But the point is: does perfect vision lead to perfect driving?

No. Proper interpretation of situations and driving experience can be more important than perfect vision.

This is where Tesla’s advantage is strongest: its data gathering and its machine learning. Tesla has trained the “brain” of its cars to be far superior to its rivals'. Even Oppenheimer analyst Colin Rusch recognizes this:

“While we continue to have misgivings about risks related to TSLA not incorporating LiDAR into its vehicles yet, we believe the learning cycles enabled by having over 1 [million] vehicles on the road is an extraordinary advantage.” (note to investors)

An autonomous car with perfect vision is unfit for the road if it does not have a well-trained “brain” to interpret what it sees and to know how to properly respond. And such a brain can only be trained through large amounts of varied and real world data.

5th: What is required for an autonomous car to have the best “brain”?

Data, large amounts of varied and real world data

An autonomous car must be trained with . . .

- A large amount of data, because AI generally gets smarter the more data it is trained on.

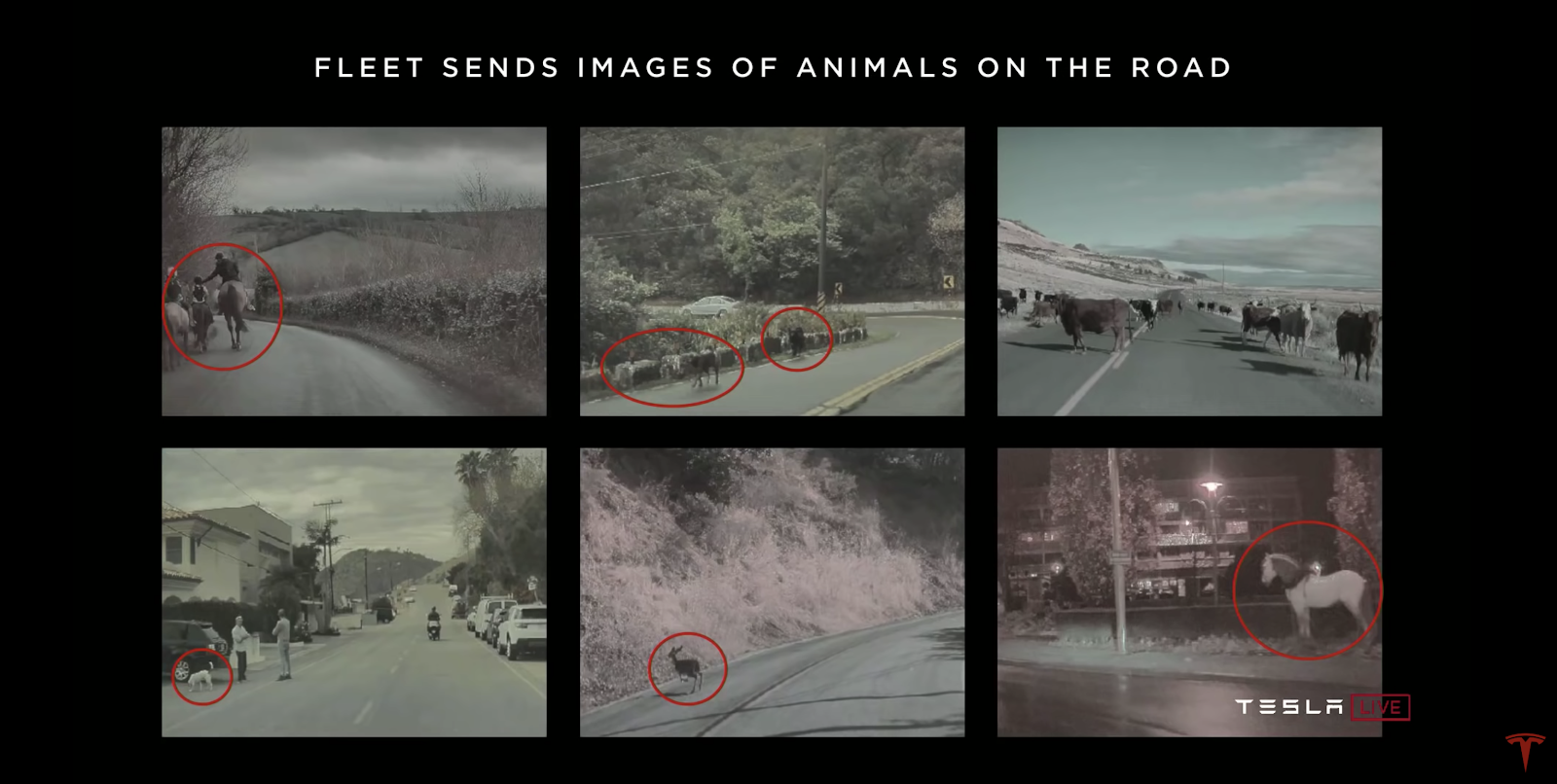

- Varied data, because the car must learn to deal with edge cases (extreme situations). Just driving around in circles will accumulate "miles driven," but that experience is useless. Likewise, learning only from freeway driving is insufficient to train the car's brain for busy city streets with lots of pedestrians, dogs being walked, and bicyclists.

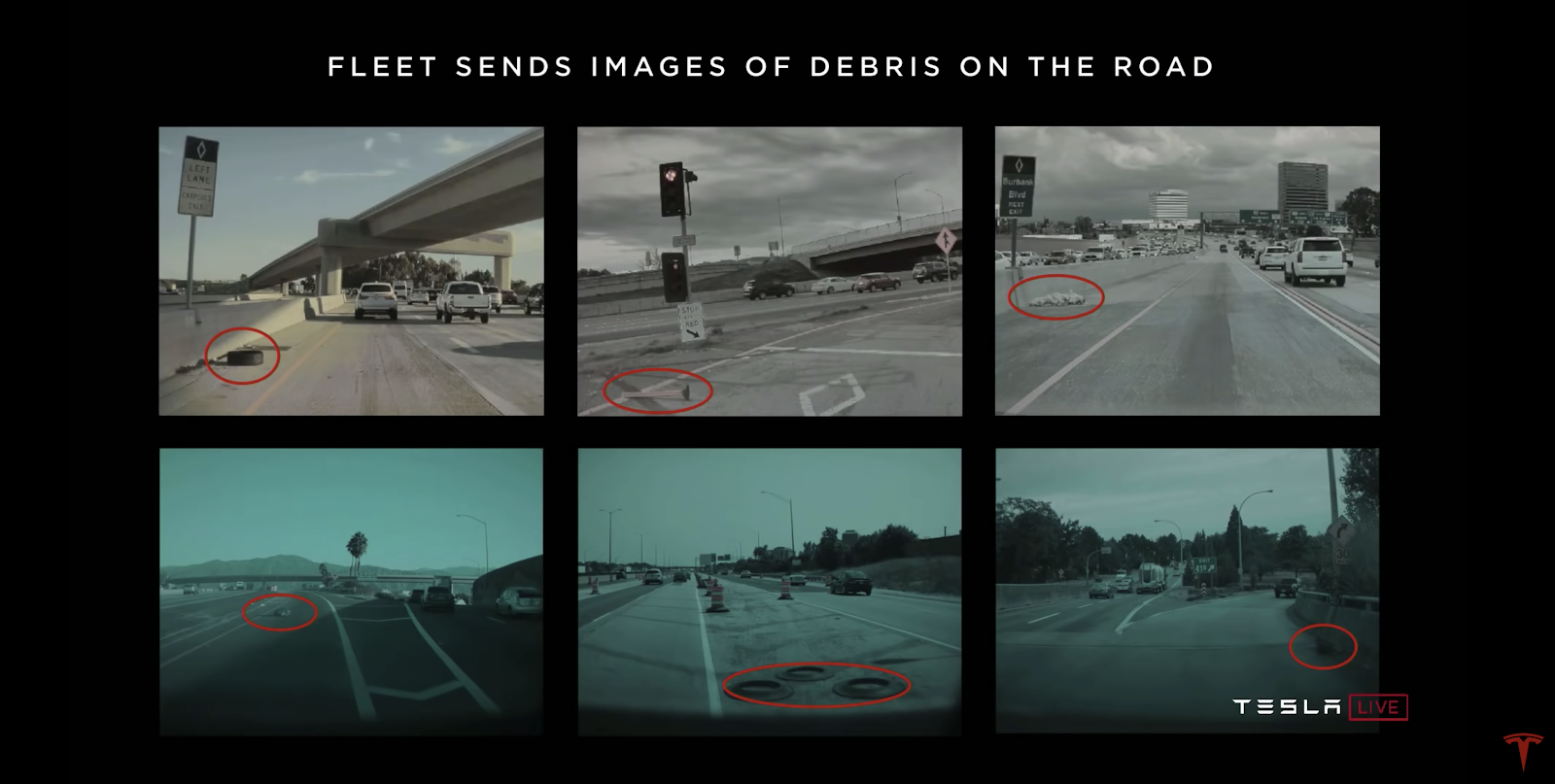

- And real-world data (rather than just simulations), because the real world is "crazy" and human engineers simply can't imagine all the scenarios a car might encounter. As Elon Musk has said, "In a simulation, you are fundamentally grading your own homework. If you know you're going to simulate it, you can definitely solve for it. But as Andrej is saying, you don't know what you don't know. The world is very weird and it has millions of corner cases" (Tesla Autonomy Day, 2:04:40-2:05:15). We cannot know all those millions of corner cases unless they are gathered from cars driving in the real world (see two pictures below).

Tesla’s data advantage is unparalleled because Tesla draws data for free from its existing fleet of one million+ cars. No other company has such vast amounts of real world data.

And other companies are not even willing to admit their data disadvantage. Waymo’s autonomous cars can work well in a highly controlled environment that is already pre-mapped (like their robotaxis in Phoenix, Arizona), but that does not solve the “brain” problem. An obsessive focus on LiDAR handicaps Waymo, except in the most ideal of controlled and pre-mapped environments.

Thus, autonomous cars with LiDAR must use the same methods as Tesla to achieve full autonomy: gathering data that feeds image recognition and AI processing to make good and safe driving decisions. What is really necessary for safe autonomous driving is not LiDAR; what is ultimately necessary is the data to handle the 0.00001% of extreme edge cases that could mean the difference between life and death, such as construction sites, animals, and debris on the road or flying through the air.
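To see why fleet size matters for cases this rare, consider a rough calculation. Assume, purely for illustration, that the 0.00001% figure (a probability of 10^-7) is a per-mile probability and that miles are independent. Then the expected number of encounters over N miles is N * p, and the chance of seeing the case at least once is 1 - (1 - p)^N. Both the per-mile interpretation and the mileages below are hypothetical.

```python
import math
from typing import Tuple


def edge_case_exposure(per_mile_probability: float, miles: float) -> Tuple[float, float]:
    """Expected encounters and probability of at least one encounter.

    Assumes each mile independently contains the edge case with the given
    probability, a simplification, since real events cluster by place and time.
    """
    expected = per_mile_probability * miles
    # 1 - (1 - p)**N, computed with log1p/expm1 to stay accurate for tiny p
    at_least_once = -math.expm1(miles * math.log1p(-per_mile_probability))
    return expected, at_least_once


if __name__ == "__main__":
    p = 0.00001 / 100  # 0.00001% expressed as a probability
    for label, miles in [("one test car, 100,000 mi", 1e5),
                         ("1M cars x 1,000 mi each", 1e9)]:
        expected, at_least_once = edge_case_exposure(p, miles)
        print(f"{label}: expected {expected:g}, P(at least once) = {at_least_once:.4f}")
```

Under these assumptions, a single test car has only about a 1% chance of ever seeing the case, while a billion fleet miles would be expected to capture it about 100 times.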

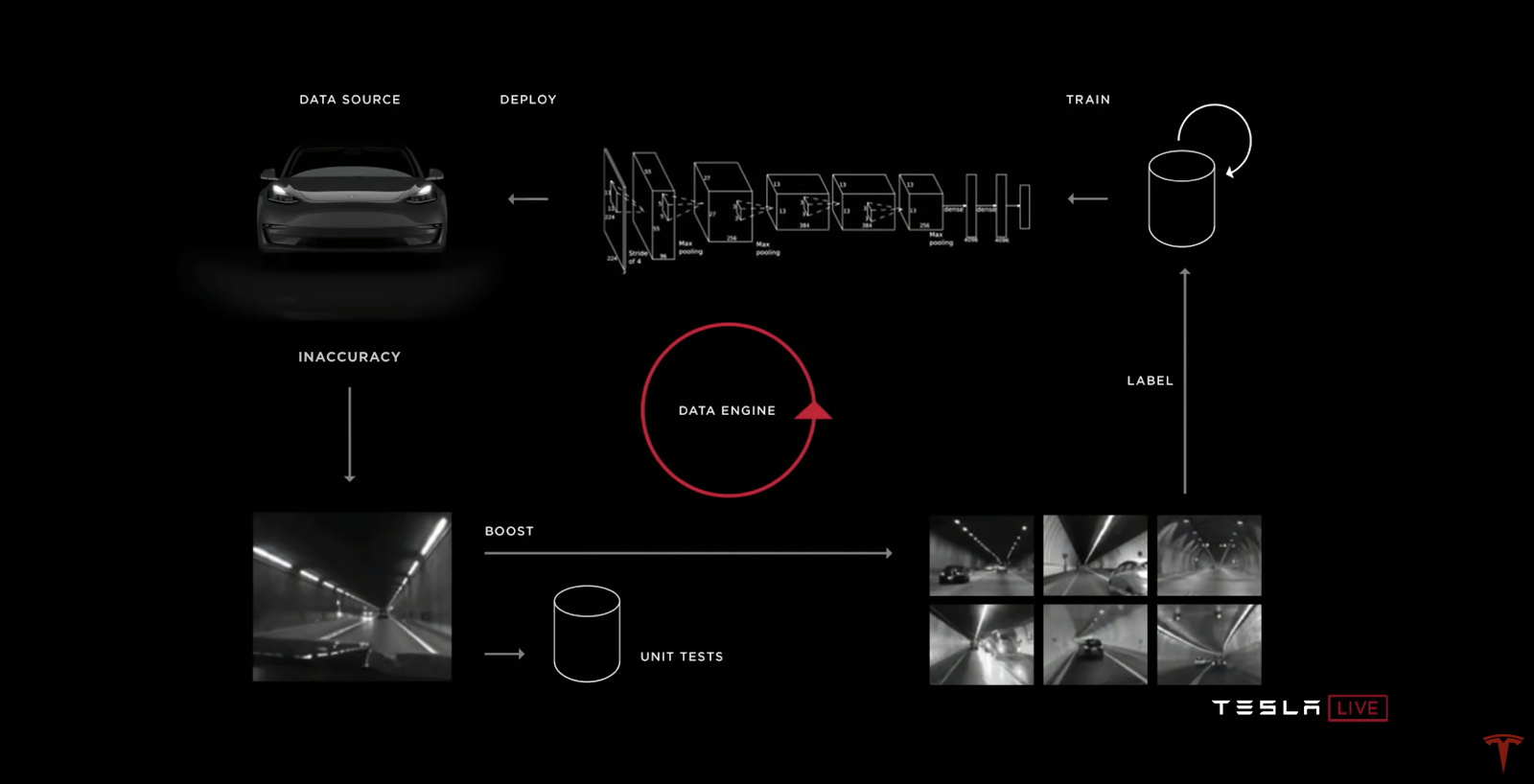

But even with all this data, the car’s driving computer still needs to be trained, and its software updated, continuously. That is exactly what Tesla does with its iterative data engine.
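As Karpathy has described it publicly, such a data engine is roughly a loop: deploy a model, find situations where it performs poorly, pull similar examples from the fleet, label them, retrain, and redeploy. The sketch below illustrates only the targeted-collection step of that loop; every name, threshold, and number in it is hypothetical, and it is not Tesla's code.

```python
from collections import Counter
from typing import Dict, Iterable


def collect_weak_cases(error_rate: Dict[str, float], fleet_clips: Iterable[str],
                       threshold: float, cap_per_scenario: int) -> Counter:
    """One hypothetical data-engine step: target the model's weak spots.

    1. Flag scenarios whose error rate exceeds `threshold`.
    2. Scan clips streamed from the fleet (each tagged with a scenario name).
    3. Keep up to `cap_per_scenario` clips per flagged scenario for labeling.
    Labeling, retraining, and redeploying would follow, then the loop repeats.
    """
    weak = {scenario for scenario, err in error_rate.items() if err > threshold}
    selected: Counter = Counter()
    for scenario in fleet_clips:
        if scenario in weak and selected[scenario] < cap_per_scenario:
            selected[scenario] += 1
    return selected


if __name__ == "__main__":
    # Made-up error rates and a made-up stream of fleet clips.
    errors = {"highway": 0.01, "construction_zone": 0.20, "animal_on_road": 0.15}
    clips = ["highway"] * 5 + ["construction_zone"] * 3 + ["animal_on_road"]
    print(collect_weak_cases(errors, clips, threshold=0.10, cap_per_scenario=2))
```

The threshold and cap make the rare, hard cases, rather than the abundant easy ones, the examples that get added to the training set.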

Conclusion & Summary

- By rejecting LiDAR, Tesla is focused on training a superior "brain" through data gathering.

- By rejecting LiDAR, Tesla will solve autonomous driving at a lower cost, with lower power consumption (hence giving the car greater range), and with greater safety by training its cars to handle the 0.00001% of extreme and dangerous edge cases.

- And the only way such a "brain" is fully trained is through collecting a large amount of varied and real world data.

- Only Tesla has such a data advantage because its existing fleet of one million+ cars feeds Tesla a large amount of varied and real world data.

Takeaway: Superior "eyes/vision" (through LiDAR) does not solve autonomous driving; Tesla's superior data gathering through its fleet will help it train a superior "brain" for interpreting what it sees and making safe driving decisions in the 0.00001% of extreme and dangerous situations.

Updates:

May 25, 2021: Tesla has begun rolling out pure vision, no longer relying on radar
